Spark Core Freezer Monitoring Success

Monday, 03 February 2014

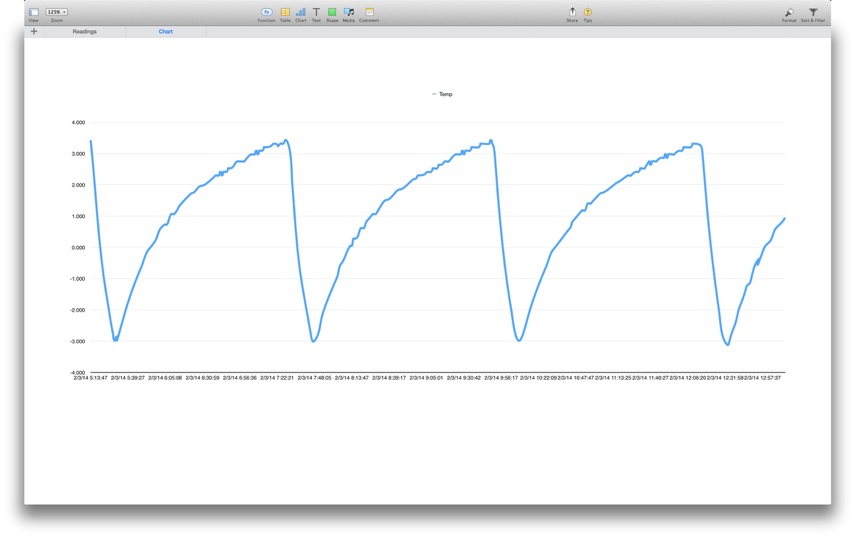

After installing the Spark Core as a freezer monitor last night, I ran curl in a while loop in bash to pull the temperature reading every minute overnight and append the results to a text file. This morning, after a little bit of sed and grep tweaking, I pulled the readings into Numbers and charted them:
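The sed and grep clean-up can also be done in Python. Here is a minimal sketch. It assumes the overnight log contains only the raw curl responses appended one after another, with no timestamps or error text mixed in. It pulls out each `last_heard` time and `result` value and writes CSV that Numbers can import:

```python
import csv
import io
import json


def readings_from_log(text):
    """Yield (last_heard, result) pairs from back-to-back JSON responses."""
    decoder = json.JSONDecoder()
    pos = 0
    while True:
        # raw_decode() won't skip leading whitespace, so step over it here.
        while pos < len(text) and text[pos].isspace():
            pos += 1
        if pos >= len(text):
            return
        doc, pos = decoder.raw_decode(text, pos)
        yield doc["coreInfo"]["last_heard"], doc["result"]


def log_to_csv(text):
    """Return the readings as CSV text with a header row."""
    out = io.StringIO()
    writer = csv.writer(out)
    writer.writerow(["time", "temperature"])
    for when, temp in readings_from_log(text):
        writer.writerow([when, temp])
    return out.getvalue()
```

`log_to_csv(open("freezer.log").read())` returns text ready to save as a `.csv` file. The name `freezer.log` is hypothetical.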

You can see that the temperature rises to around 3℉. The compressor then kicks in and lowers the temperature to around −3℉. The temperature then slowly rises up to around 3℉ again, and so on — illustrating the hysteresis of the temperature control system in the freezer.
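A simple two-threshold (bang-bang) controller produces this kind of sawtooth. The sketch below is a toy model, not the freezer's actual control logic. The ±3℉ switching points come from the chart, and the warming and cooling rates are made-up numbers chosen only to give a similar shape:

```python
def thermostat_step(temp_f, compressor_on, low=-3.0, high=3.0):
    """Return the new compressor state for a two-threshold (hysteresis) controller."""
    if temp_f >= high:
        return True           # too warm: start (or keep) cooling
    if temp_f <= low:
        return False          # cold enough: stop cooling
    return compressor_on      # inside the band: keep doing the same thing


def simulate(minutes, start_f=0.0, warm_rate=0.1, cool_rate=0.5):
    """Simulate the temperature one minute at a time (hypothetical rates in F/min)."""
    temp, on = start_f, False
    history = []
    for _ in range(minutes):
        on = thermostat_step(temp, on)
        temp += -cool_rate if on else warm_rate
        history.append(round(temp, 2))
    return history
```

The compressor doesn't switch off the moment the temperature dips below 3℉, and it doesn't switch back on the moment it rises above −3℉. That gap is the hysteresis, and it stops the compressor from cycling on and off every few seconds.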

I wrote a Python script to automate the process of grabbing the result, parsing the JSON and adding the result to the table being charted in the spreadsheet:
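Here is a minimal sketch of that loop. It is a stand-in, not the script itself: it appends to a CSV file instead of driving Numbers directly. The output file name and the 60-second interval are assumptions. The device ID and token are the placeholders from the sample request later in this post:

```python
import csv
import json
import time
import urllib.request
from datetime import datetime, timezone

# Placeholders copied from the sample curl command; substitute your own values.
DEVICE_ID = "1234567abcdef"
ACCESS_TOKEN = "feedbeef"
URL = ("https://api.spark.io/v1/devices/%s/temperature?access_token=%s"
       % (DEVICE_ID, ACCESS_TOKEN))
CSV_PATH = "freezer.csv"  # hypothetical output file


def parse_temperature(body):
    """Extract the integer reading from the variable's JSON response."""
    return json.loads(body)["result"]


def append_reading(path, when, temp):
    """Append one timestamped reading to the CSV file."""
    with open(path, "a", newline="") as f:
        csv.writer(f).writerow([when.isoformat(), temp])


def poll_forever(interval_s=60):
    """Fetch, parse and record one reading per interval until interrupted."""
    while True:
        try:
            with urllib.request.urlopen(URL, timeout=30) as resp:
                temp = parse_temperature(resp.read().decode("utf-8"))
            append_reading(CSV_PATH, datetime.now(timezone.utc), temp)
        except (OSError, ValueError, KeyError) as exc:
            # A missed reading shouldn't end an overnight run; log it and carry on.
            print("skipped reading:", exc)
        time.sleep(interval_s)
```

Calling `poll_forever()` starts logging. Writing to a plain file keeps the polling side independent of whichever program draws the chart.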

This isn’t particularly practical, but it was interesting to see nonetheless. My main goal is monitoring, so I will drop the charting part in favour of a simple notification to my phone via Prowl.app when the temperature gets too high.
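Here is a minimal sketch of the alert side. It assumes Prowl's public `add` endpoint (`https://api.prowlapp.com/publicapi/add`), which takes `apikey`, `application`, `event`, `description` and `priority` as form fields. The API key is a placeholder. The 10℉ threshold is a hypothetical choice, comfortably above the roughly 3℉ peaks in the chart:

```python
import urllib.parse
import urllib.request

PROWL_URL = "https://api.prowlapp.com/publicapi/add"
PROWL_API_KEY = "your-prowl-api-key"  # placeholder
ALERT_THRESHOLD_F = 10                # hypothetical threshold


def should_alert(temp_f, threshold_f=ALERT_THRESHOLD_F):
    """True when the reading is warm enough to be worth a notification."""
    return temp_f > threshold_f


def prowl_request(temp_f, api_key=PROWL_API_KEY):
    """Build (but don't send) the form-encoded POST for one Prowl notification."""
    fields = {
        "apikey": api_key,
        "application": "Freezer monitor",
        "event": "Freezer too warm",
        "description": "The freezer is at %d F" % temp_f,
        "priority": 2,  # Prowl's highest ("emergency") priority
    }
    data = urllib.parse.urlencode(fields).encode("ascii")
    return urllib.request.Request(PROWL_URL, data=data)


def check_and_notify(temp_f):
    """Send a notification if needed; return True if Prowl accepted it."""
    if not should_alert(temp_f):
        return False
    with urllib.request.urlopen(prowl_request(temp_f), timeout=30) as resp:
        return resp.status == 200
```

The monitor polls every minute, so a real version would also record when it last alerted. A warm freezer would then produce one notification rather than one per minute.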

Spark Core

Sunday, 02 February 2014

My Spark Core modules arrived a week or so ago. I got one with a chip antenna and one with a u.FL connector for an external antenna, because I plan to use the latter in a building that’s too far from my router for a chip antenna to reach. I also picked up a battery board and a relay board, although I don’t have specific projects in mind for either.

The Spark Core contains an STM32F103 microcontroller (ARM Cortex-M3) paired with a TI CC3000 WiFi module. An iPhone app lets you easily get your cores onto your network. The cores come with a bootloader that supports reflashing over WiFi. In fact, Spark even provides an IDE that lets you edit code and flash it to any of your modules from the comfort of your favourite browser.

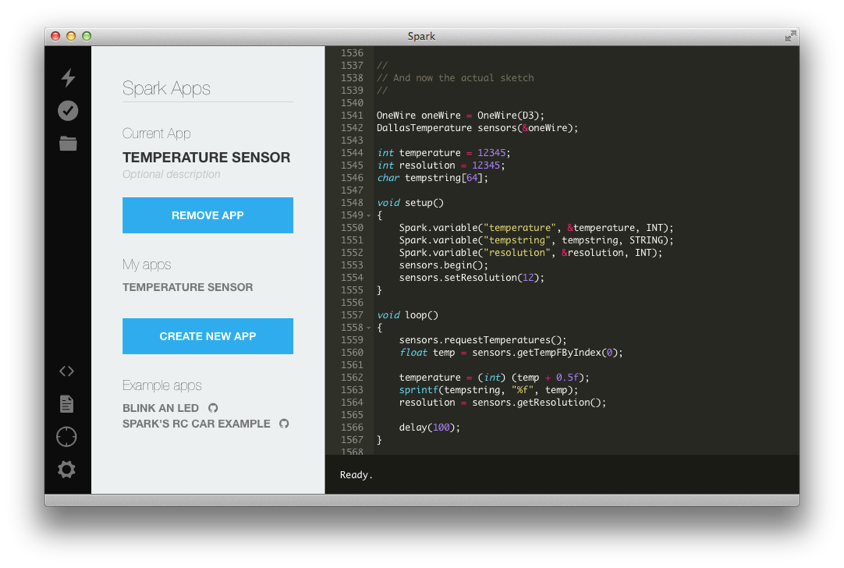

Spark have made the Spark Core as compatible with Arduino as they can, so many Arduino libraries should work provided they aren’t doing anything AVR-specific. The IDE doesn’t currently support multiple files, though, so you’ll need to paste library code into your sketch. Despite that single-file limitation, which Spark are working on, the IDE is pretty nice: it even supports some Emacs key bindings. The IDE also lets you expose variables from the Spark Core. These variables can be read through a REST API on Spark’s servers, which means you don’t need to configure tunneling or DynDNS to reach your cores.

I already had a Maxim DS18B20 1-Wire digital thermometer chip lying around, and it took just a few minutes to get that temperature sensor working thanks to Miles Burton’s Dallas Temperature Control Library for Arduino.
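The library hides the sensor's raw format, but that format is simple. In its default 12-bit mode, the DS18B20 reports temperature as a 16-bit two's-complement value in units of 1/16 °C. The sketch below shows the conversion in Python. The Fahrenheit step and the final integer are there only to show what an integer variable can hold. Whether the actual sketch rounds or truncates isn't stated:

```python
def ds18b20_raw_to_celsius(raw):
    """Convert a 16-bit DS18B20 reading (12-bit resolution) to degrees Celsius.

    The reading is two's complement with 0.0625 °C (1/16) per bit.
    """
    if raw & 0x8000:   # sign bit set: the value is negative
        raw -= 0x10000
    return raw / 16.0


def celsius_to_fahrenheit(c):
    return c * 9.0 / 5.0 + 32.0


# A reading of 0xFEFE is -16.125 °C, about 2.975 °F. An int variable
# would hold 3 if the value is rounded, or 2 if it is truncated.
freezer_f = celsius_to_fahrenheit(ds18b20_raw_to_celsius(0xFEFE))
```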

The non-library part of the sketch is shown above. It registers an integer variable called temperature in setup(), and updates it on every pass through loop().

Here’s a sample of the JSON you get back from querying that temperature variable:

```
$ curl "https://api.spark.io/v1/devices/1234567abcdef/temperature?access_token=feedbeef"
{
  "cmd": "VarReturn",
  "name": "temperature",
  "result": 3,
  "coreInfo": {
    "last_app": "",
    "last_heard": "2014-02-03T04:30:09.921Z",
    "connected": true,
    "deviceID": "1234567abcdef"
  }
}
```
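The JSON response above is easy to pick apart. The sketch below returns the reading together with `connected` and `last_heard`. It treats a `last_heard` more than five minutes old as stale, which is an assumption rather than anything the API defines:

```python
import json
from datetime import datetime, timezone


def parse_variable_response(body):
    """Return (value, connected, last_heard) from a variable-read response."""
    doc = json.loads(body)
    info = doc["coreInfo"]
    # last_heard looks like "2014-02-03T04:30:09.921Z" (UTC).
    heard = datetime.strptime(info["last_heard"], "%Y-%m-%dT%H:%M:%S.%fZ")
    return doc["result"], info["connected"], heard.replace(tzinfo=timezone.utc)


def is_stale(last_heard, now=None, max_age_s=300):
    """True if the core hasn't been heard from within max_age_s (hypothetical limit)."""
    now = now or datetime.now(timezone.utc)
    return (now - last_heard).total_seconds() > max_age_s
```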

Between the REST API and the JSON format, it’s pretty trivial to digest the output programmatically. I put together a little CGI script in Python to make it easy to query the temperature:
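Here is a minimal stand-in for such a script (not the original). It assumes a web server configured to run Python CGI scripts, fetches the variable, and prints a plain-text page. The device ID and token are the placeholders from the sample request. Web servers set `GATEWAY_INTERFACE` for CGI requests, so checking for it keeps the fetch from running when the file is imported elsewhere:

```python
#!/usr/bin/env python3
import json
import os
import sys
import urllib.request

# Placeholders from the sample request; substitute your own values.
DEVICE_ID = "1234567abcdef"
ACCESS_TOKEN = "feedbeef"
URL = ("https://api.spark.io/v1/devices/%s/temperature?access_token=%s"
       % (DEVICE_ID, ACCESS_TOKEN))


def render(temp):
    """Build a full CGI response: header lines, a blank line, then the body."""
    return "Content-Type: text/plain\r\n\r\nFreezer temperature: %d F\n" % temp


def main(out=sys.stdout):
    try:
        with urllib.request.urlopen(URL, timeout=10) as resp:
            temp = json.loads(resp.read().decode("utf-8"))["result"]
    except (OSError, ValueError, KeyError):
        # CGI lets the script set the HTTP status through a "Status" header.
        out.write("Status: 502 Bad Gateway\r\nContent-Type: text/plain\r\n\r\n"
                  "Could not reach the Spark Core\n")
        return
    out.write(render(temp))


# Only fetch when invoked by a web server, not when imported (e.g. to test render()).
if __name__ == "__main__" and "GATEWAY_INTERFACE" in os.environ:
    main()
```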

Once the breadboarded sensor was working, I prepared it for duty monitoring the freezer in our garage. I put the DS18B20 on the end of a cable, with heat shrink over the connections, and mounted the Spark Core in an old iPod touch box with holes drilled for the sensor cable, antenna and USB power cord. Here it is, gently clamped to the top of the USB power adaptor: